In early 2026, a new open-source AI coding model family named IQuest-Coder-V1 entered the developer ecosystem with strong benchmark results and an unconventional training philosophy. Developed by IQuest Lab, IQuest Coder aims to move beyond static code generation toward a deeper understanding of how real software is written, modified, debugged, and improved over time.

Rather than training solely on finished code snapshots, IQuest Coder emphasizes software evolution—learning from commit histories, refactors, and real development workflows. This shift makes it one of the more interesting open-source coding LLMs released so far.

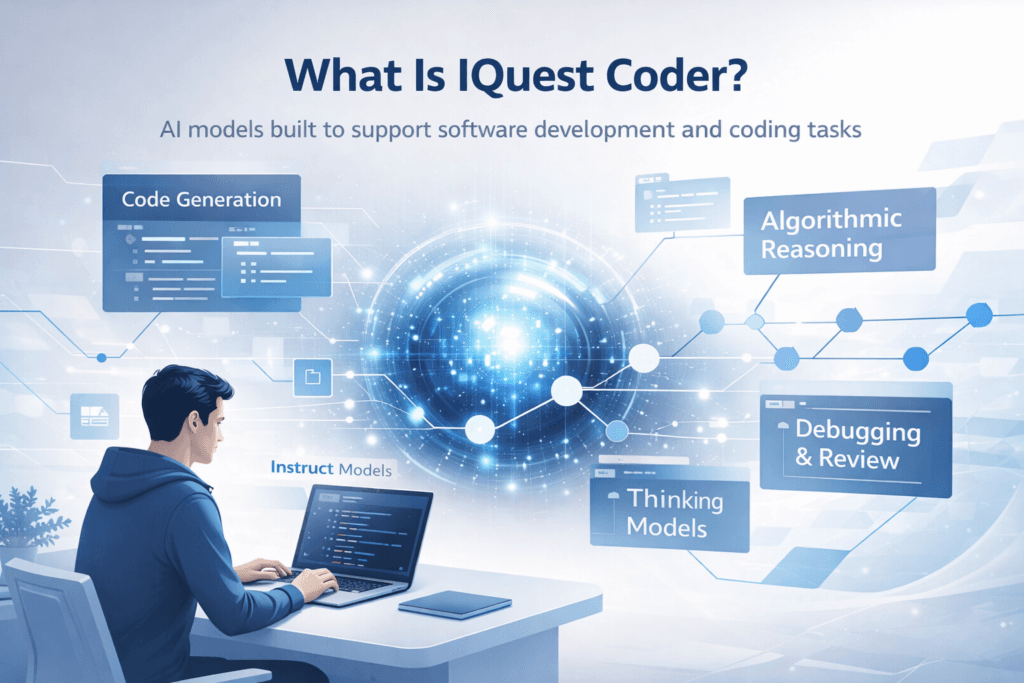

What Is IQuest Coder?

IQuest Coder is a family of large language models purpose-built for software engineering tasks. It supports a wide range of developer workflows, including code generation, debugging, refactoring, documentation, and algorithmic reasoning.

The model family is available in multiple sizes and variants to suit different needs:

Model Sizes

- 7B parameters

- 14B parameters

- 40B parameters

Model Variants

- Instruct models – optimized for direct coding assistance and instruction following

- Thinking models – trained with additional reasoning-focused reinforcement learning for complex, multi-step problem solving

- Loop variants – use a recurrent transformer architecture to improve inference efficiency while maintaining performance

All variants are openly available through Hugging Face, making them accessible for research, self-hosting, and production experimentation.

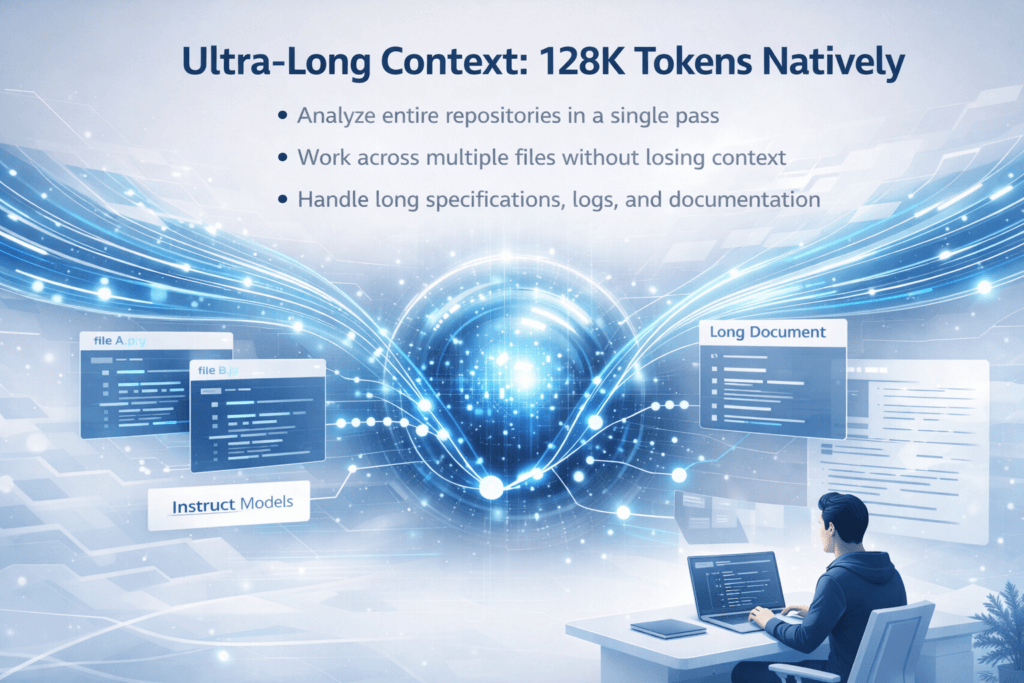

Ultra-Long Context: 128K Tokens Natively

One of IQuest Coder’s most practical strengths is its native 128K token context window. This allows the model to:

- Analyze small-to-medium repositories, or large slices of bigger ones, in a single pass

- Work across multiple files without losing context

- Handle long specifications, logs, and documentation

For real-world development, where problems rarely fit into a few thousand tokens, this is a significant advantage over code models with shorter context windows.
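To get a feel for what 128K tokens covers, the sketch below estimates whether a set of source files fits in the window. It assumes "128K" means 131,072 tokens and uses a rough rule of thumb of about 4 characters per token; the real count depends on IQuest Coder's own tokenizer, so use that tokenizer for exact numbers. The helper names and the `reserve_for_output` budget are hypothetical.

```python
from pathlib import Path

# Assumption: "128K" means 128 * 1024 = 131,072 tokens.
CONTEXT_WINDOW = 128 * 1024
# Rough heuristic only: ~4 characters per token. The true ratio depends on the tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a string from its character length (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(texts: list[str], reserve_for_output: int = 8_192) -> bool:
    """Return True if the combined texts plus a reserve for the reply fit in the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve_for_output <= CONTEXT_WINDOW


def load_repo_sources(root: str, suffixes: tuple[str, ...] = (".py",)) -> list[str]:
    """Read every file under `root` with a matching suffix, skipping non-UTF-8 files."""
    texts = []
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            try:
                texts.append(path.read_text(encoding="utf-8"))
            except UnicodeDecodeError:
                continue
    return texts
```

For example, `fits_in_context(load_repo_sources("my_project"))` gives a quick yes/no before sending a whole project to the model.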

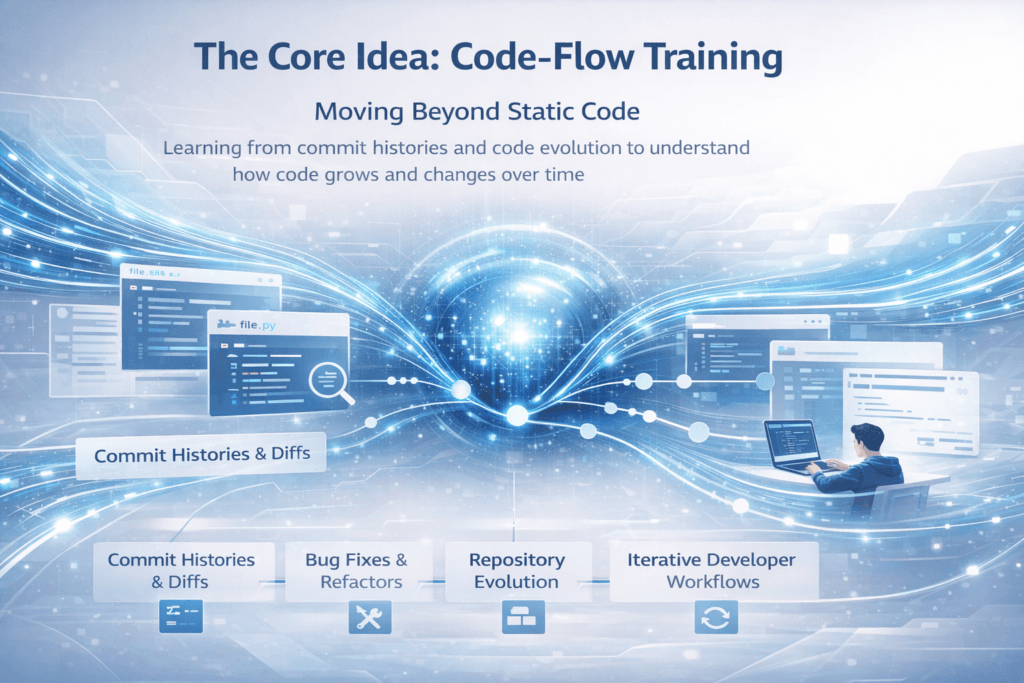

The Core Idea: Code-Flow Training

Moving Beyond Static Code

Most code LLMs are trained on static snapshots of source files. While effective for syntax and common patterns, this approach ignores how code actually changes during development.

IQuest Coder introduces a Code-Flow training paradigm, which focuses on the process of software development rather than just the final output.

What the Model Learns From

- Commit histories and diffs

- Bug fixes and refactors

- Repository-level evolution

- Iterative developer workflows

By observing how code changes over time, the model learns not just what code looks like but why it changes, which enables more context-aware assistance with debugging, refactoring, and planning the next change.
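The sketch below illustrates the general idea of learning from code evolution rather than snapshots: it turns two versions of a file from one commit into a training record holding the commit message (the "why"), the old code, and a unified diff (the "how"). This is not IQuest Lab's published pipeline; the record format and the functions `make_code_flow_example` and `to_prompt_and_target` are hypothetical, and the sketch relies only on Python's standard `difflib`.

```python
import difflib
from dataclasses import dataclass


@dataclass
class CodeFlowExample:
    """Hypothetical training record: code before a change plus the change itself."""
    message: str  # commit message explaining why the code changed
    before: str   # file contents before the commit
    diff: str     # unified diff describing how it changed


def make_code_flow_example(path: str, before: str, after: str, message: str) -> CodeFlowExample:
    """Build a record from two versions of one file and the commit message."""
    diff_lines = difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return CodeFlowExample(message=message, before=before, diff="".join(diff_lines))


def to_prompt_and_target(ex: CodeFlowExample) -> tuple[str, str]:
    """Format the record so a model learns to predict the diff from the code and the intent."""
    prompt = f"Commit message: {ex.message}\n\nCurrent code:\n{ex.before}\nDiff:\n"
    return prompt, ex.diff


before = "def mean(xs):\n    return sum(xs) / len(xs)\n"
after = "def mean(xs):\n    if not xs:\n        return 0.0\n    return sum(xs) / len(xs)\n"
example = make_code_flow_example("stats.py", before, after, "Handle empty input in mean()")
prompt, target = to_prompt_and_target(example)
print(target)
```

Framing the target as a diff, rather than the full new file, pushes a model to focus on the edit and its motivation instead of re-emitting unchanged code.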

Benchmark Performance (With Important Context)

On SWE-bench Verified, a widely used benchmark for evaluating real-world software engineering tasks:

- An early evaluation reported 81.4%

- After identifying and removing an evaluation-time environment leakage related to git history access, the corrected and official score is 76.2%

This corrected score makes IQuest-Coder-V1-40B competitive with leading proprietary and open-source coding models, especially given its parameter count and open availability.

Importantly, the authors have publicly acknowledged the issue and emphasized the corrected results, which adds credibility and transparency to the project.
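To put the correction in concrete terms: SWE-bench Verified consists of 500 human-validated tasks, so the gap between 81.4% and 76.2% corresponds to a specific number of tasks. The short calculation below assumes both figures are resolve rates over all 500 tasks.

```python
# Assumption: both scores are percentages of the 500 SWE-bench Verified tasks resolved.
TOTAL_TASKS = 500
early_score = 81.4
corrected_score = 76.2

early_resolved = round(early_score / 100 * TOTAL_TASKS)          # 407 tasks
corrected_resolved = round(corrected_score / 100 * TOTAL_TASKS)  # 381 tasks
drop_points = round(early_score - corrected_score, 1)            # 5.2 percentage points

print(f"{early_resolved} -> {corrected_resolved} tasks "
      f"({early_resolved - corrected_resolved} fewer, {drop_points} points)")
```

Under that assumption, the leakage fix accounts for 26 tasks (407 resolved vs. 381).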

Key Technical Features

1. Multi-Stage Code-Flow Training

Instead of treating each file independently, the training pipeline captures development workflows across time. This helps the model:

- Suggest meaningful next steps, not just valid syntax

- Assist with refactoring and debugging

- Recognize long-term design patterns

2. Instruct vs. Thinking Specialization

- Instruct models are optimized for speed and practical coding tasks

- Thinking models focus on deeper reasoning, ideal for algorithms, competitive programming, and complex logic

Developers can choose the variant that best fits their workflow.

3. Loop Architecture for Efficiency

The Loop variants use a recurrent transformer design that shares parameters across layers, improving inference efficiency. This is especially useful for deployment and self-hosting.
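The sketch below shows in plain Python what sharing parameters across layers means. A standard stack allocates separate weights for every layer, while a looped model stores one block and applies it repeatedly. The toy `Block` (one scalar weight and bias) is a hypothetical stand-in for a real transformer block, and IQuest's actual Loop design may differ in how many layers are shared and how many iterations run. Both paths below perform the same number of block applications, so the sketch shows only the saving in stored parameters, not the specific efficiency mechanisms of IQuest's design.

```python
import math
from dataclasses import dataclass


@dataclass
class Block:
    """Toy stand-in for a transformer block: y = tanh(w * x + b)."""
    w: float
    b: float

    def __call__(self, xs: list[float]) -> list[float]:
        return [math.tanh(self.w * x + self.b) for x in xs]

    def num_params(self) -> int:
        return 2  # one weight and one bias


def stacked_forward(blocks: list[Block], xs: list[float]) -> list[float]:
    """Standard deep model: every layer has its own parameters."""
    for block in blocks:
        xs = block(xs)
    return xs


def looped_forward(block: Block, xs: list[float], iterations: int) -> list[float]:
    """Looped (recurrent-depth) model: one block's parameters are reused each iteration."""
    for _ in range(iterations):
        xs = block(xs)
    return xs


depth = 8
stack = [Block(w=0.5 + 0.01 * i, b=0.0) for i in range(depth)]
shared = Block(w=0.5, b=0.0)

print("stacked params:", sum(blk.num_params() for blk in stack))
print("looped params: ", shared.num_params())
print(looped_forward(shared, [1.0, -1.0], iterations=depth))
```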

4. Modern Attention Design

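The models incorporate techniques such as Grouped Query Attention (GQA), in which groups of query heads share a single key/value head. This shrinks the key/value cache kept during generation, balancing model quality against memory and compute costs at inference time.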
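The effect of GQA on memory can be estimated directly: the key/value cache scales with the number of KV heads, so sharing each KV head among several query heads shrinks it by that ratio. The sketch below computes the cache size and the query-to-KV head mapping. The configuration numbers (48 layers, 40 query heads, 8 KV heads, head dimension 128, 16-bit cache values) are illustrative assumptions, not IQuest Coder's published hyperparameters.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Size of the key + value cache for one sequence (the leading 2 = keys and values)."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


def kv_head_for_query_head(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Map a query head to the KV head it shares under GQA (contiguous groups)."""
    assert num_q_heads % num_kv_heads == 0, "query heads must split evenly into groups"
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size


# Hypothetical configuration, for illustration only.
layers, q_heads, kv_heads, head_dim, seq_len = 48, 40, 8, 128, 128 * 1024

mha = kv_cache_bytes(layers, q_heads, head_dim, seq_len)   # one K/V head per query head
gqa = kv_cache_bytes(layers, kv_heads, head_dim, seq_len)  # 5 query heads share each K/V head

print(f"MHA cache: {mha / 2**30:.1f} GiB, GQA cache: {gqa / 2**30:.1f} GiB ({mha // gqa}x smaller)")
print([kv_head_for_query_head(h, q_heads, kv_heads) for h in range(10)])
```

With these assumed numbers, the cache for a single 128K-token sequence drops from 120 GiB to 24 GiB, which is why KV-head sharing matters so much at long context lengths.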

Practical Use Cases

IQuest Coder is well-suited for:

- ✔ Code generation and review

- ✔ Debugging and refactoring assistance

- ✔ Algorithm design and competitive programming

- ✔ Documentation and explanation generation

- ✔ Teaching and learning programming concepts

Because the models are open-source, teams can integrate them into IDEs, CI pipelines, or internal developer tools without vendor lock-in.

Limitations to Keep in Mind

Despite its strengths, IQuest Coder has clear limitations:

- Generated code is not executed by the model, so outputs must be tested in real environments

- Performance may vary on highly specialized frameworks or niche domains

- Reasoning-heavy variants can be slower for long outputs

These constraints are common across current code LLMs and are not unique to IQuest Coder.
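Because the model does not run its own output, a common safeguard is to execute generated code together with a few tests in a separate process before accepting it. The minimal sketch below does this with Python's standard `subprocess` module and a timeout. `run_candidate` is a hypothetical helper, and a child process is not a security sandbox: untrusted model output should run inside a container or VM in practice.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_candidate(generated_code: str, test_code: str, timeout_s: float = 10.0) -> tuple[bool, str]:
    """Run generated code plus tests in a fresh Python process; return (passed, output)."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(generated_code + "\n\n" + test_code + "\n", encoding="utf-8")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False, f"timed out after {timeout_s}s"
        # A non-zero exit code means an assertion failed or the code raised an error.
        return result.returncode == 0, result.stdout + result.stderr


candidate = "def add(a, b):\n    return a + b\n"
tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0\n"
passed, output = run_candidate(candidate, tests)
print("passed" if passed else f"failed:\n{output}")
```

The same check can gate suggestions in a CI job: reject any generated patch whose tests fail or time out.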

Why IQuest Coder Matters

IQuest Coder’s real contribution isn’t just its benchmark scores—it’s how the model is trained.

By shifting focus from static code snapshots to code evolution, the project aligns more closely with how developers actually work. This approach suggests a broader trend for future coding LLMs: models that understand not just what code is, but how it grows, changes, and improves over time.

For developers, students, and AI researchers alike, IQuest Coder offers a compelling glimpse into the next generation of AI-assisted software engineering—open, transparent, and grounded in real development workflows.


Code is for execution, not just conversation. I focus on building software that is as efficient as it is logical. At Ganforcode, I deconstruct complex stacks into clean, scalable solutions for developers who care about stability. While others ship bugs, I document the path to 100% uptime and zero-error logic.